The Journey Begins: 17 Educators Take Their First Steps with AI

On Wednesday, October 8, 2025, Lead by Learning hosted the first session of their 2025 Pilot Community of Practice: AI Together. Seventeen educators from the Oakland Unified School District, each navigating their own unique classroom challenges, gathered together for the first time, united by a single question: How could AI help their students learn?

In search of this answer, Lead by Learning is partnering with Northeastern University to run a four-part Community of Practice (CoP). These sessions are co-led by Northeastern professor Dr. Rasika Bhalerao; co-op students Dylan Kao, Lucy Paolini, and Jerry Wang; and Lead by Learning facilitators. With their combined expertise in computer science, AI ethics, psychology, and adult learning, the team brings an interdisciplinary perspective to help educators navigate complex topics surrounding AI usage and ethics.

During our Warm Welcome, educators were asked to share a specific dilemma they were holding about their students. Their responses included how they could use AI in classrooms where students had a large range of abilities and how they could use it to give personalized feedback. Despite their different backgrounds, different schools, different grades, and different subjects, they all shared something important: curiosity.

But curiosity came with concerns, including:

- Ensuring AI is supporting learning rather than replacing it

- Preventing student plagiarism

- Using AI to help address student challenges while protecting student privacy

These concerns and questions built the foundation of our exploration.

Understanding the Basics

Before anyone could move forward, educators needed to understand what they were actually working with. Northeastern Professor Dr. Rasika Bhalerao started at the beginning: “What is AI?”

Borrowing computer scientist Andrew Ng’s description of machine learning, “the science of getting computers to act without being explicitly programmed,” the session began unraveling the mystery. Large language models (LLMs), they learned, were essentially prediction machines, trained on vast swaths of internet text to guess what word should come next.

But here’s the catch: these models could make mistakes. They could reproduce the biases baked into their training data. Understanding this wasn’t just academic; it was essential for using AI responsibly with students.
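To make the "prediction machine" idea concrete, here is a minimal sketch of next-word prediction using simple word-pair counts. It is a toy illustration, not how ChatGPT, Claude, or Gemini are actually built; the training text and the function names `train_bigram_counts` and `predict_next_word` are made up for this example.

```python
from collections import Counter, defaultdict

# Tiny, made-up training text (an assumption for illustration only).
TRAINING_TEXT = (
    "the student reads the book . "
    "the student reads the essay . "
    "the student writes the essay ."
)

def train_bigram_counts(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following

def predict_next_word(following, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    if word not in following:
        return None
    return following[word].most_common(1)[0][0]

counts = train_bigram_counts(TRAINING_TEXT)
print(predict_next_word(counts, "student"))  # "reads" follows "student" most often here
print(predict_next_word(counts, "robot"))    # never seen in training, so no prediction
```

Even this toy version shows both limits from the session: it can only echo patterns in its training text, and it has nothing useful to say about words it has never seen.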

Learning to Speak AI’s Language

With that foundation in place, co-op student Dylan Kao introduced the art of prompt engineering: essentially, learning to communicate effectively with AI.

Dylan shared, “AI is inherently stupid; don’t leave things up for interpretation.” The point: AI needs clear, specific instructions.

The educators learned four key principles:

- Context matters. Tell the AI who you are, what you teach, and what your students need, but skip demographic details that might trigger bias.

- Be specific. Don’t dance around what you want. Say it clearly.

- State your task directly. “Make this better” won’t cut it. Instead, AI needs directions like: “Revise this paragraph for clarity and add specific examples of …”

- Specify the format. Want a rubric? A lesson plan? A list of questions? Tell the AI exactly how to structure its response.
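For educators who like to see things written out, the principles above can be sketched as a small prompt template. This is one possible way to organize a prompt, not the method taught in the session; the `build_prompt` helper and the example classroom details are hypothetical.

```python
def build_prompt(context, task, output_format):
    """Combine context, task, and format into one explicit prompt string."""
    parts = {"Context": context, "Task": task, "Format": output_format}
    # Refuse to build a prompt that leaves a section open to interpretation.
    missing = [name for name, value in parts.items() if not value.strip()]
    if missing:
        raise ValueError("Missing prompt section(s): " + ", ".join(missing))
    return "\n".join(f"{name}: {value.strip()}" for name, value in parts.items())

# Hypothetical example values; note there are no student demographic details.
prompt = build_prompt(
    context="I teach 7th-grade science to students with a wide range of reading levels.",
    task="Write five discussion questions about the water cycle, from easiest to hardest.",
    output_format="A numbered list, one question per line.",
)
print(prompt)
```

The resulting text can be pasted into any chat-based AI tool. Keeping the three sections separate makes it easy to revise one part, such as the format, without rewriting the whole prompt.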

Experimentation Time

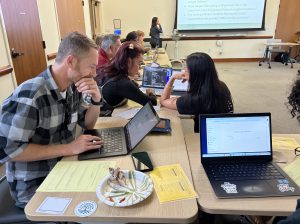

Then came a very exciting moment: hands-on time. Educators had the chance to pull out their devices and play around with their own prompts on their preferred AI platforms, including ChatGPT, Claude, and Gemini. At this moment, the room transformed from a lecture to a playground.

Teacher Collaboration Time

As in all Lead by Learning collaborative spaces, educators were provided time to “think alone” before thinking together. This was their opportunity to apply their learning to their contexts. They were asked to reflect on:

- What happened?

- Did the prompt generate what you envisioned?

- If not, how can we get it there?

- How are you then going to implement this in the classroom?

- How is it going to help you support students?

- Are there particular students who would be best supported by this prompt? Why?

One educator shared, “I learned about AI, generative AI, and what type of specific instructions to give to get a better result. I gave it a prompt, and I got a response.” They added that the generated response matched what they envisioned, and they plan to use it in the classroom: “I might use it in my classroom for my students to learn about current components of green buildings because they change very often.”

This educator walked away with next steps for using AI prompts to support “students who struggle with research or do not know where to go to find specific information.”

Next Steps

The educators went home after the session with concrete homework:

- Try their prompts with real students.

- Revise based on what happened.

- Return to the next meeting in November with student data and stories based on what they learned and tried.

Teachers were also curious about AI ethics. They raised questions about bias and how to protect student data when using AI in the classroom. Future CoP sessions will address these questions.