Moving from Hesitance to Learning: The Power of a Community of Practice to Challenge AI Assumptions

When Lead by Learning launched AI Together, a community of practice for K-12 Oakland Unified School District educators, we weren’t sure exactly what direction the work would take. We knew only that teachers needed a space to explore AI safely, critically, and creatively. Three months later, what has emerged is a story of professional growth: educators who arrived with hesitations or unfamiliarity are now developing nuanced, confident, and deeply thoughtful approaches to using AI in their classrooms.

One of the most consistent themes across educator reflections was the shift from uncertainty to a sense of agency. Many teachers started in the fall unsure of where AI belonged in their work, or whether it belonged at all.

“In October, I wasn’t engaging with AI at all,” one teacher shared. “There was a lot of apprehension about ethics, the ways it was being used, and especially student privacy.” As educators began experimenting with support from the Lead by Learning team, perceptions began to shift. Sessions on key skills such as prompt engineering, ethical use, and privacy gave educators a safe space to learn and explore: “The shift for me was realizing that you have to play with AI to understand it. Once I did that, I started to see the possibilities.”

Between sessions, educators were encouraged to apply what they were learning in any way that felt meaningful to their classroom. The range of applications was astonishing:

- Designing practice stations for fifth graders to strengthen sentence structure

- Analyzing student transcripts to identify graduation pathways

- Collaborating with AI to generate multimodal assessments that check whether students understood the material rather than chasing a grade

- Using AI as a drafting partner for rubrics, emails, and lesson plans

One teacher described a breakthrough moment while grading essays. They fed a set of anonymized fifth-grade paragraphs into their AI of choice, along with the rubric. Within minutes, the AI identified strong and weak points in each student’s essay and was even able to develop a personalized study plan for each student. “What usually takes me over an hour took three minutes,” the teacher said. “It didn’t replace my judgment, but it cut my labor in half and gave me time back to actually support students.”

Others were surprised by how generative AI expanded their instructional creativity. “I tried the slideshow feature and was shocked,” one educator said. “It came up with examples I wouldn’t have thought of on my own.”

Across the board, teachers emphasized that experimenting with AI helped them understand its limits as well as its possibilities. One noted, “Using AI in real ways helped me see where I need to be careful; I’m more comfortable, and I actually know what it’s good for versus what it’s not, and the only real way to do that is to experiment with it.”

While many educators still had concerns about the ethical use of AI, those concerns had evolved: “In October, I was concerned about the big moral questions, such as water usage,” a teacher said. “Now I’m moving into the more practical questions, such as how do I protect my students’ data? How do I keep bias out of the outputs? How do I use this responsibly?” Another shared: “I’m still concerned about bias. But now that I understand how prompts shape responses, I feel more empowered to be part of that conversation.”

Several educators described learning how AI can reinforce inequities if not prompted thoughtfully. This led to discussions about how to avoid unintended amplification of race, socioeconomic status, gender, or ability in AI-generated feedback.

One teacher described the challenge this way: “Asking AI to ‘just not be racist or biased’ is like telling someone: ‘Don’t think of a pink elephant.’ It doesn’t work that way.” This comment opened up a larger conversation about why bias doesn’t simply disappear when we tell an AI to avoid it: the model has already been trained on patterns that are shaped by race, class, gender, ability, and other structural factors.

Instead of relying on blanket instructions like “ignore this,” educators learned that effective bias mitigation requires adding context, not removing it, and giving the model very explicit constraints. Several teachers noted that results improved when they were “extremely strict” with their prompts: defining what counts as fair evaluation, specifying what information should not influence the output, and outlining the reasoning process the AI must follow. Others found that, rather than completely omitting sensitive context that can induce bias, such as race, socioeconomic class, location, or gender, it was often more reliable to direct the AI in how to handle that context ethically: for example, asking it to generate feedback that is identity-affirming and asset-based.

Through these discussions, educators began to see bias mitigation not as a single command, but as an intentional design practice that mirrors the equity work they already do in their teaching.
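For readers who like to see the idea concretely, here is a minimal sketch of what a “strict” prompt might look like when assembled in Python. It is an illustration only, not a template used in AI Together: the function name `build_feedback_prompt`, the default list of excluded factors, and the exact wording of each section are all assumptions made for this example. The sketch only builds the prompt text; it does not call any AI service.

```python
# Hypothetical defaults for illustration; not taken from the AI Together sessions.
DEFAULT_EXCLUDED_FACTORS = ("race", "socioeconomic status", "gender", "ability")


def build_feedback_prompt(rubric, student_text, excluded_factors=DEFAULT_EXCLUDED_FACTORS):
    """Assemble a feedback prompt with explicit constraints.

    Instead of a blanket "don't be biased", the prompt defines fair
    evaluation, names what must not influence the output, lays out the
    reasoning steps, and asks for identity-affirming, asset-based feedback.
    """
    factors = ", ".join(excluded_factors)
    sections = [
        "You are helping a teacher draft feedback on a student paragraph.",
        # Define what counts as fair evaluation.
        "Definition of fair evaluation: judge the writing only against the rubric below.",
        f"Rubric:\n{rubric.strip()}",
        # Specify what information should not influence the output.
        f"Do not let the following influence the feedback: {factors}, "
        "or any assumptions about them.",
        # Outline the reasoning process the model must follow.
        "Reasoning process: (1) quote evidence from the paragraph for each rubric "
        "criterion, (2) name one strength, (3) suggest one next step.",
        # Direct how to handle identity rather than only telling the model to ignore it.
        "Tone: identity-affirming and asset-based.",
        f"Student paragraph (anonymized):\n{student_text.strip()}",
    ]
    return "\n\n".join(sections)
```

Calling `build_feedback_prompt("Uses complete sentences.", "My dog runs fast.")` returns a single block of text that can be pasted into whichever AI tool a teacher prefers. Each section can be edited to match a real rubric and classroom context, and the output still needs the teacher’s own review.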

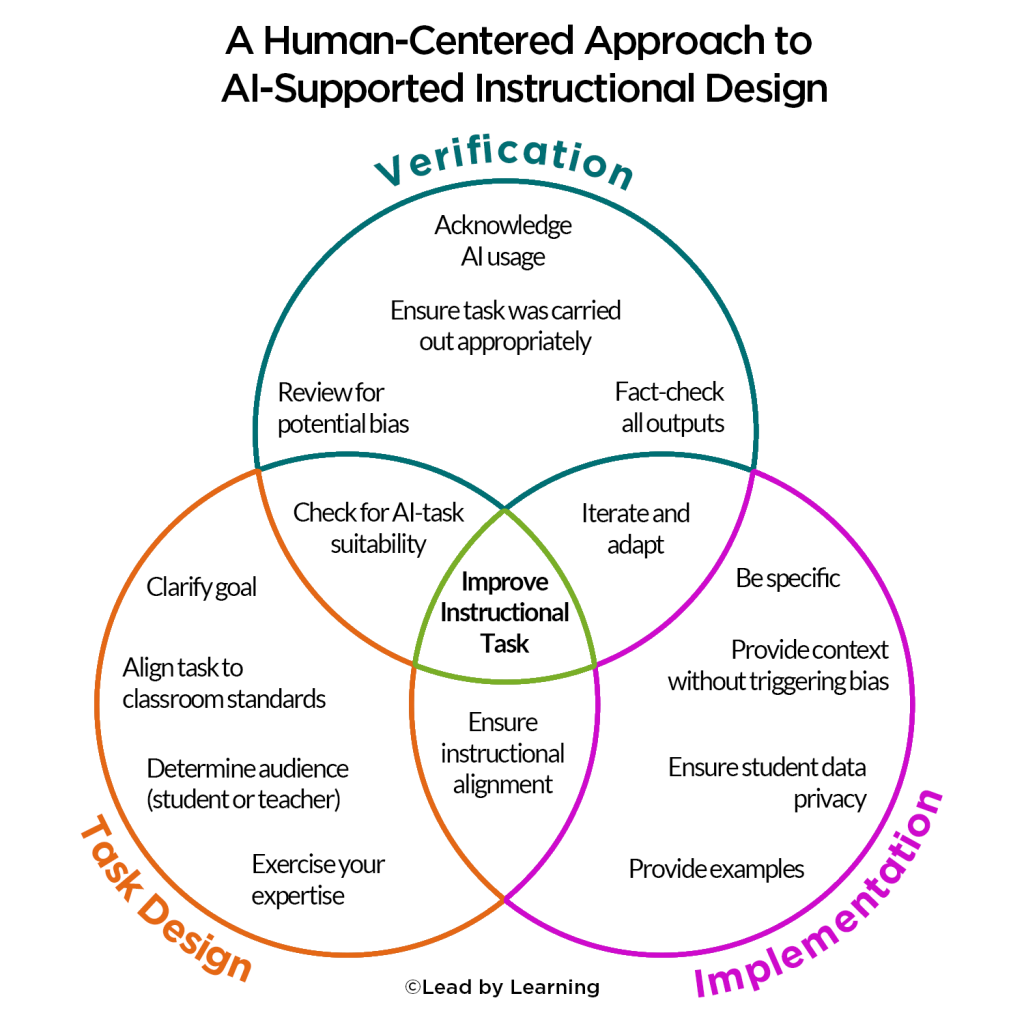

As these themes emerged, the Lead by Learning team began synthesizing what we were hearing into a coherent, human-centered framework for the safe and effective use of AI in the classroom. Rather than imposing a top-down set of rules, the framework grew organically from the shifts teachers were making in real time. These educators were defining clear academic purposes for when and why to use AI, developing awareness of its limitations and potential biases, building concrete skills in prompt design and iterative refinement, and consistently verifying output accuracy while safeguarding student privacy.

Through practice and discussion, educators began using more sophisticated prompting strategies such as framing constraints, giving examples, or giving strict definitions of what constitutes fair or equitable reasoning. This not only improved the quality of responses but also deepened teacher understanding of how bias shows up in AI systems and what mitigation looks like in everyday classroom use.

As AI Together draws to a close, what stands out most is the collective expertise educators have built in community with one another. Educators can bring what they have learned over the last few months back to their schools and share it with colleagues, strengthening the broader professional community around them. In a world where technology is continuously evolving, these educators now have a durable foundation for navigating it: the ability to think critically, design intentionally, and build classroom environments where AI is used effectively, safely, and ethically. Their learning positions them not just as AI users, but as leaders who can guide their communities through technological change for years to come.

Registration for our Spring AI Together cohort, open to secondary Bay Area educators, is now available. Learn more and register here.